Below: a map of the existing rigorous ‘what works’ evidence about institutional responses to child abuse, and a summary of what that evidence says

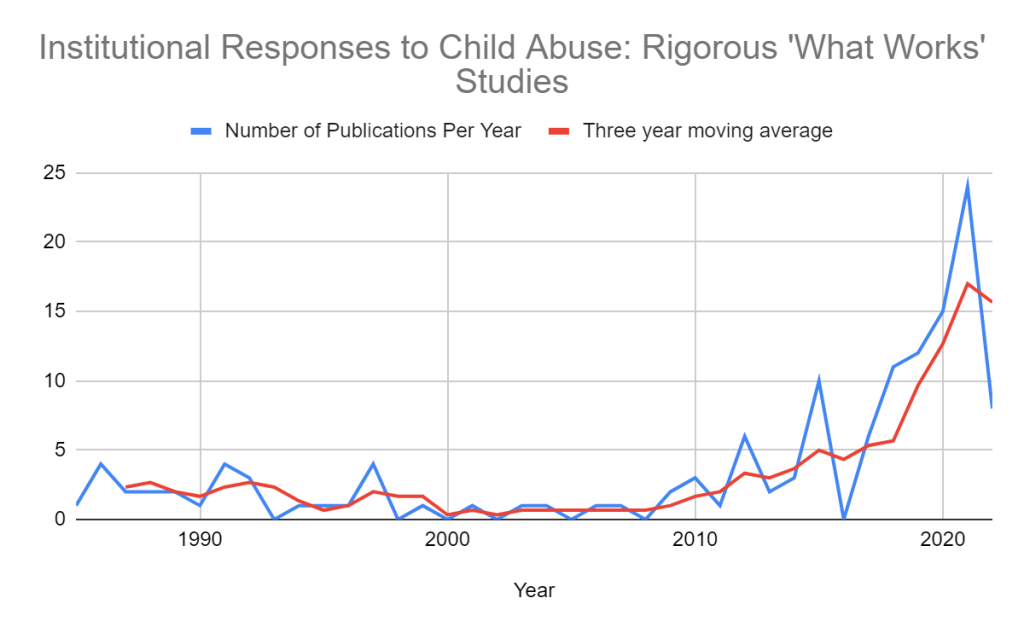

Giving Evidence and partners have produced a map of the existing evidence (and gaps in it) about the effectiveness of institutional responses to child maltreatment, and a ‘Guidebook’ which summarises what that evidence says. This document summarises them both. An article in Alliance Magazine written with the funder (Porticus) describes both products, the rationale for them, and also Porticus’ learning from its own daily work. These products were first produced in 2020, and updated versions were produced in 2023, with many more studies! (See below.)

What’s included? We looked for effectiveness studies. We included any primary study with a decent counterfactual (so RCTs and some quasi-experimental designs), and systematic reviews, in organisational settings such as schools, youth clubs, sports clubs, churches, and residential care. We included studies from any country and published at any point in time; both academic and non-academic studies; and studies about prevention, encouraging disclosure, response (e.g., legal sanction), and treatment of survivors.

The evidence and gap map. Below is a picture of our map. We ran a systematic search for the kinds of studies that we were including, screened them all for relevance, and then categorised them. We put them on a grid, in which the rows are interventions (from the top: prevention, disclosure, response, treatment), and the columns are the outcomes. Each study that we include is placed in the cell(s) to which it relates (e.g., if the study looked at the effect of Intervention 2 on Outcomes 3 and 4). This is the standard structure of an evidence gap map. As you can see, much of the map is blank, but there are a few marked concentrations. A detailed report about the map is here.

We also made an interactive version of the map. It includes summaries of the evidence: for cells with one or two completed primary studies, there are summaries of the studies; and for cells with 3+ studies, there are syntheses of the studies.
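For readers who think in code, the grid structure and the summary rule described above can be sketched roughly as follows. This is only an illustration, not how the actual map was built: the study records, the outcome names and the function names are all hypothetical, and placing a multi-intervention study in every intervention/outcome combination is an assumption made for simplicity.

```python
from collections import defaultdict

# Hypothetical study records: the IDs and outcome names are invented for illustration.
studies = [
    {"id": "S1", "interventions": ["prevention"], "outcomes": ["knowledge", "disclosure rate"]},
    {"id": "S2", "interventions": ["prevention"], "outcomes": ["knowledge"]},
    {"id": "S3", "interventions": ["prevention"], "outcomes": ["knowledge"]},
    {"id": "S4", "interventions": ["treatment"], "outcomes": ["wellbeing"]},
]


def build_map(records):
    """Place each study in every (intervention, outcome) cell it relates to."""
    grid = defaultdict(list)
    for study in records:
        for intervention in study["interventions"]:
            for outcome in study["outcomes"]:
                grid[(intervention, outcome)].append(study["id"])
    return grid


def cell_content(study_ids):
    """Apply the rule from the text: 1-2 studies get summaries, 3+ get a synthesis."""
    count = len(study_ids)
    if count == 0:
        return "gap"
    if count <= 2:
        return "study summaries"
    return "synthesis"


grid = build_map(studies)
for cell in [("prevention", "knowledge"), ("prevention", "disclosure rate"), ("response", "wellbeing")]:
    # .get avoids creating empty cells in the defaultdict while looking them up
    print(cell, "->", cell_content(grid.get(cell, [])))
```

An empty cell is a gap in the evidence, which is exactly the information the blank areas of the map convey.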

Recent years have seen many more rigorous studies published in this area. (NB: interpreting this needs a bit of caution. Sometimes studies take ages to be published. One published in 2021 gathered its data in 2013! Perhaps researchers used Covid lockdowns to get around to writing up and publishing studies, rather than these all being genuinely recent studies.)

Giving Evidence and Porticus did a webinar with the Campbell Collaboration in which we talked through this whole project: the logic of why the funder started this, how we made the EGM, what it found, how we made the Guidebook, what that found, the interactive version of both, and what we are doing next. Plus questions from audience members.

What this evidence and gap map shows:

- 108 completed primary studies, and 20 systematic reviews. There are also eight protocols. Of the 108 completed primary studies, 79 are RCTs.

- The studies don’t at all match where the world’s population is: none from India, only three from China, and only 19 from Africa.

- The evidence is concentrated (though much less so than in our first iteration, three years ago), mainly in education-based prevention programmes in early education and school settings. Fully 60 of the 108 completed primary studies look at that.

- Most of the studies are about sexual abuse. Sexual abuse was considered by 93 of the studies.

- 38 studies reported on physical abuse, 19 on neglect, and 27 on emotional abuse.

- Most of the studies are about prevention. Prevention was examined in 120 papers (some studies generate more than one paper).

- Treatment was studied in only a handful of primary studies. On disclosure, we found three primary studies of interventions aiming to facilitate disclosure: last time, we found none.

- No completed study has assessed interventions with adults to stop them offending within organisations.

- Only a few studies focus on children who are particularly at risk.

- No causal studies were conducted in religious organisations.

- Almost all the studies have appreciable risk of bias, i.e., the ‘answers’ that they report may be wrong. The colours on the map above indicate risk of bias: red is high, and green is low.

- Only one study had educational attainment as an outcome.

What the evidence says. We have produced a ‘Guidebook’ which summarises the studies, and provides guidance for using the evidence.

Full report about the map. The full report, which discusses the findings in detail, is here.

How does the evidence align to activity within child protection? We also explored finding data about activity in child protection (e.g., what schools, governments, youth clubs etc. are doing) and mapping it to the EGM frame – to identify where there is lots of activity but no evidence (=priority for new evidence) and areas of little activity but where the evidence is clear that something works (=areas to encourage more activity). Basically, that didn’t work because the evidence about child protection activity is so scant (=important research gap!). Our report into this exploration is here.

Context

Clearly, many nonprofits and other types of institution are working on improving safeguarding and child safety, and the issue has gained prominence because of the allegations about aid workers in Haiti, etc. It therefore seems important to establish what is already known and what still isn’t known about what interventions are effective at improving child safety in various situations. That should enable (i) delivery organisations and funders to make evidence-based decisions, where there is sound evidence, and (ii) researchers to prioritise producing evidence in the important gaps which still exist. To be clear, our study is a review of the existing evidence: we did not produce fresh primary research.

An academic version of our report has now gone through peer review with the Campbell Collaboration, and is here. It explains precisely what we did (search, screening, coding etc.). A non-academic version is here and a summary of the findings is here.

What evidence & gap maps are

Evidence Gap Maps (EGMs) consolidate what we know and do not know about ‘what works’ in a given area: in this case, child abuse within institutions. They show where there are systematic reviews and impact evaluations, and provide a graphical display of areas where evidence on this topic is plentiful, sparse or non-existent.

EGMs show what research already exists within your scope (above a certain quality threshold); they do not show what that research says. They’re like real maps, which show where the pubs are but not what they serve 😉

For example, you may want to ensure that the institutions that you fund have done and are doing everything possible to prevent child abuse. You’re aware that many organisations put in place safeguarding policies. But you are not sure whether safeguarding policies actually have any effect on the levels of abuse. This EGM did not find that out, but it does show whether anybody has yet looked at that question, and if they have, the geographies and types of institutions that they examined, and the research methods that they used (which matters since some research methods are more reliable than others). That may answer your question, or you may want to commission new research into it. The ‘Guidebook’ (described above) shows what the evidence says.

This makes EGMs useful for policymakers, funders and practitioners looking for evidence to inform policies and programmes. For donors and researchers, these maps can inform a strategic approach for commissioning and conducting research. EGMs are not intended to provide recommendations or guidelines for policy and practice but are meant to be sources that inform policy development and guidelines for practice.

This map looks only at studies of ‘what works’, i.e., the effect of some intervention(s) on some outcome(s) for particular groups. Our map therefore does not include non-causal studies, such as studies of prevalence of abuse, attitudes, or activities within organisations (e.g., to reduce abuse or encourage disclosure).

This video explaining evidence and gap maps was produced by the Centre for Homelessness Impact, the UK’s ‘what works’ centre on homelessness:

The research was undertaken with the Campbell Collaboration and Global Development Network.

Next steps

We are talking to various funders, practitioners, researchers and others about prioritising topics on which to produce more research, and then getting that produced to fill key gaps and inform practice.
