UK charitable foundation staff and trustees are very white and very male. They’re also often senior in years, and pretty posh. None of those characteristics is necessarily a problem in itself, but (a) the homogeneity creates a risk of lacking diversity of views, experiences and perspectives. Many studies and real-world cases have shown that increasing diversity leads to better decisions and better results – including on climate. And (b) foundation staff and boards may collectively have little experience of (and hence little understanding of) the problems they seek to solve, and little insight into the communities they seek to serve.

A group of UK grant-making foundations aims to change this, so has funded the Foundation Practice Rating, an annual assessment of UK foundations on their practices on diversity, transparency (e.g., how easy it is to find out what they fund and when) and accountability (e.g., whether they have a complaints process, whether they cite the basis on which they assess applications and make decisions, whether they publish any analyses of their own effectiveness). Giving Evidence designed and runs the research and does the assessments.

Read an article in Alliance Magazine announcing this project, and a second article with some early findings.

The results from the first three years are published:

2022 results are summarised here, and the full report is here. Watch the launch event, in which we discuss the results, here.

2023 results are here. Again, watch the launch event, in which we discuss the results, here.

2024 results are here, and the launch event is here.

We are running the research again during Autumn 2024 and will publish the Year Four results in Spring 2025. For our Year Four (2024/25), we have to change the way that we select our cohort of foundations, because the list which we used hitherto has ended: the way that we will draw our cohort for Year Four is described in this paper.

Recent press coverage includes pieces in Ethical Marketing News, in Charities Management magazine, and in Circle, a network and resource for donors in the Middle East.

The criteria remain pretty much unchanged since 2021: details here. We run a consultation process before the research each year. This document shows the responses for the 2024 consultation, and the changes that we will make as a result. (In short: adding a scoring criterion around foundations being Living Wage Funders – we already assessed whether they are Living Wage Employers. On some of the issues raised, we will consider making changes in future years: that delay is to avoid chopping and changing.) We also have to make small changes to the criteria around investment policies, because the Charity Commission has changed its guidance on them. The changes and our new criteria are explained here.

We consulted on the criteria before we started the Year One research (and before each year since). Our criteria are drawn in part from other rating schemes, such as GlassPockets’ Transparency Standard, Give.Org’s BBB Standards for Charity Accountability and the Funders Collaborative Hub’s Diversity, Equity and Inclusion Data Standard. They are also informed by the public consultation we ran in summer 2021. For Year Two (2022/23) we did a second public consultation. Below are the criteria we started with – though some turned out not to work.

Giving Evidence conducts and publishes this rating annually. Our research and assessment use only a foundation’s public materials (specifically, its website and annual reports). This is because we are taking the stance of a prospective grantee – who may be deterred if a foundation looks too different from them.

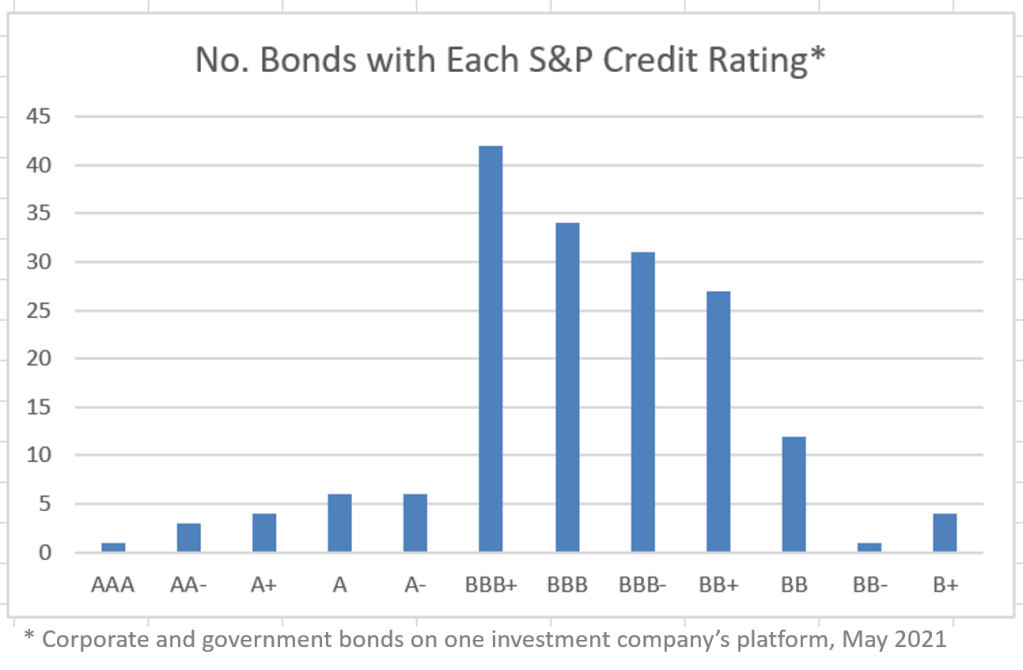

Please note that this is a rating. It is not a ranking. That is so that improvement isn’t zero-sum: everybody can rise or everybody can fall. Equally, everybody can get an A or everybody can get a D. It is also not an index (which shows how the whole pack moves over time). Unlike a ranking or index, a rating is an absolute measure. There is no pre-determined distribution across the ‘grades’ because that would make it zero-sum. This is the same as for commercial bond ratings: see graph below.

What are the criteria, and how can a foundation do well on them?

Before we gathered any data for Year One (2021), we published our ‘starting criteria’, downloadable here. These are the criteria with which we started: we expected that some would turn out to be ambiguous, duplicative, or unworkable, so that the final list might differ a bit – which it did. That document includes the provenance of each criterion. The eventual list that we actually used is in the report above, and here.

We also published guidance for any foundation on doing well on the rating. For example, one criterion is around having an accessible website, so we included guidance on how to do that.

Foundations are exempt from questions irrelevant to them, e.g., a foundation with no or few staff is exempted from reporting pay gap data, and a foundation with small investments is exempted from reporting its investment policy.

Whose idea was this and who is funding it?

The project is funded by various UK foundations, led by Friends Provident Foundation. Other funders include: Barrow Cadbury Trust, John Ellerman Foundation, Joseph Rowntree Reform Trust, Joseph Rowntree Charitable Trust, Indigo Trust, John Lyon’s Charity, City Bridge Trust, and Paul Hamlyn Foundation. These funders meet periodically to advise on the project, and the Association of Charitable Foundations joins those meetings. The funders do not have any ‘editorial influence’, e.g., over the results of the research. The project draws on various social movements that have gained momentum over the years, for example, Black Lives Matter, Equal Pay, Disability and LGBTQ+ rights.

Giving Evidence has worked on these issues before. For example, in this study, we looked at how many UK and US charities and grant-making foundations have public meetings (e.g., Annual General Meetings) or hold decision-making meetings in public. (Answer: very few.) We were inspired by the 800-year-old City Bridge Trust in London, all of whose grant-making meetings are in public, and Global Giving, which has a public AGM. Caroline also wrote in the Financial Times about the lack of demographic diversity on foundation boards and some ideas for improving that.

Will this include all UK grant-making foundations?

No, simply because there are more of them than our resources allow us to assess. Each year, we assess 100 foundations. They are:

- All the foundations funding this project. They are all trying to learn and improve their own practice. This project is not about anybody hectoring anybody else. In Year One, 10 foundations funded the work. And:

- The five largest foundations in the UK (by grant budget). This is because they are so large relative to the overall size of grant-making: The UK’s largest 10 foundations give over a third of the total given by the UK’s largest 300 or so foundations; and giving by the Wellcome Trust alone is 12% of that. We also know that the transactions that many charities experience cover the range of foundation sizes.

- A stratified random subset of other foundations. We are including all types of charitable grant-making foundation, e.g., endowed foundations, fund-raising foundations, family foundations, corporate foundations, community foundations. We have not included public grant-making agencies (e.g., local authorities or the research councils) because they have other accountability mechanisms. Our set is a mix of sizes: a fifth from the top quintile, a fifth from the second quintile, and so on. The set is chosen at random from the list of the UK’s largest 300 foundations* as published in the Foundation Giving Trends report 2019 by the Association of Charitable Foundations, plus the UK’s community foundations.

*In fact, that report details 338 foundations. We are looking at those, plus 45 community foundations (the 47 listed by UK Community Foundations minus the two for which no financial information is given), i.e., 383 foundations in total.

For subsequent years, we will rate foundations in categories (1) and (2) above, and will do a fresh sample for (3).
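For readers who want to see what a stratified draw of this kind looks like, here is a minimal sketch in Python. It is an illustration only, not Giving Evidence's actual procedure: the function name, the toy pool of 383 foundations with made-up giving figures, and the sample size of 85 (100 less 10 funders and the five largest, assuming no overlap between those groups) are all assumptions.

```python
import random

def stratified_sample(foundations, n_total, n_strata=5, seed=0):
    """Draw a near-equal number of foundations from each size band.

    `foundations` is a list of (name, annual_giving) pairs.
    All names and figures used with this function are hypothetical.
    """
    rng = random.Random(seed)
    # Rank by giving, largest first, then cut into contiguous bands (quintiles by default).
    ranked = sorted(foundations, key=lambda f: f[1], reverse=True)
    size, extra = divmod(len(ranked), n_strata)
    strata, start = [], 0
    for i in range(n_strata):
        end = start + size + (1 if i < extra else 0)
        strata.append(ranked[start:end])
        start = end
    # Share the sample as evenly as possible across the bands.
    per_stratum, remainder = divmod(n_total, n_strata)
    sample = []
    for i, stratum in enumerate(strata):
        k = min(per_stratum + (1 if i < remainder else 0), len(stratum))
        sample.extend(rng.sample(stratum, k))
    return sample

# Toy pool: 383 hypothetical foundations with invented giving figures.
pool = [(f"Foundation {i}", 1000 - i) for i in range(383)]
chosen = stratified_sample(pool, n_total=85)
```

With 85 to draw across five bands, each band contributes 17 foundations, which is the "a fifth from each quintile" idea described above.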

I’m a UK foundation. Can I opt into this?

Yes, by becoming a funder of the project. As mentioned above, all the project’s funders are included. Beyond them, our selection will be random.

You can also opt to be rated by paying a small fee – even if you are not in the set to be rated. You will be researched alongside all the other foundations, and get your results – which will be published – but you will not be included in the main sample of 100 (to avoid selection bias).

Please get in touch about either of these.

Where do the criteria already under consideration come from?

They come from two sources. First, our own experience over many years with many funders (and in many countries). Second, we are borrowing some ideas from similar initiatives such as Transparency International’s Corporate Political Engagement Index, the “Who Funds You?” initiative for think tank funding transparency, the Equileap Global Gender Equality Index, ACF Transparency & Engagement, Stonewall Workplace Equality Index and ShareAction’s annual ranking of fund managers.

Who conducts the research and analysis? How is it done?

Giving Evidence does the research and analysis. We have a team of researchers, all with experience of charities and funding, and with diverse backgrounds. They are also demographically diverse. Each foundation is assessed on the criteria by two researchers independently. Foundations are allocated to researchers at random. The whole research process is overseen by Enid Kaabunga, our Research Manager. Where the two researchers’ scorings differ, there is a discussion between them and Sylvia to understand and resolve the difference: it is sometimes necessary for a third researcher to assess the foundation on the disputed criteria, and if so, they are ‘blind’ to their colleagues’ assessments. This is a standard process used in many situations, e.g., for marking university exams and assessing research applications.
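The comparison step in this double-assessment process can be sketched in a few lines of Python. This is only an illustration of the idea, not the tooling Giving Evidence uses: the function name and the criterion names in the example are hypothetical.

```python
def disputed_criteria(scores_a, scores_b):
    """Return the criteria on which two independent assessments differ.

    Each argument maps a criterion name to the score one researcher gave.
    A criterion scored by only one researcher also counts as disputed.
    """
    all_criteria = scores_a.keys() | scores_b.keys()
    return sorted(c for c in all_criteria if scores_a.get(c) != scores_b.get(c))

# Hypothetical scores for one foundation from two researchers.
researcher_1 = {"complaints_process": 1, "accessible_website": 0, "publishes_pay_gap": 1}
researcher_2 = {"complaints_process": 1, "accessible_website": 1, "publishes_pay_gap": 1}

to_discuss = disputed_criteria(researcher_1, researcher_2)
```

Only the criteria returned here would need discussion or, if necessary, a blind third assessment.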

We then send each included foundation the data about itself for it to check. (We use the contact details that it provides publicly.) Once we have a data-set that we are happy is correct, we turn the data into scores: each foundation gets a numerical score on each of the three pillars. The total number of points available varies between foundations because of their exemptions. From that score and the total available, we calculate a % score on each pillar for that foundation, and that translates into its A/B/C/D rating on that pillar. The combination of those pillar scores translates into the foundation’s overall rating.
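The arithmetic for a single pillar can be sketched as follows. The percentage calculation follows the description above; the grade boundaries are placeholders invented for this example (the actual thresholds are in the published reports), and the function names and example figures are likewise hypothetical.

```python
def pillar_percentage(points_scored, points_available):
    """Percentage score on one pillar, given the points this foundation could earn."""
    if points_available <= 0:
        raise ValueError("points_available must be positive")
    return 100.0 * points_scored / points_available

# Placeholder boundaries, NOT the rating's real thresholds.
HYPOTHETICAL_BANDS = [(75, "A"), (50, "B"), (25, "C"), (0, "D")]

def grade(percentage, bands=HYPOTHETICAL_BANDS):
    """Map a percentage to a letter using (minimum %, letter) bands, highest first."""
    for minimum, letter in bands:
        if percentage >= minimum:
            return letter
    return bands[-1][1]

# Example: a pillar worth 20 points, of which 2 do not apply to this
# foundation because of an exemption, leaving 18 available; it scores 12.
pct = pillar_percentage(points_scored=12, points_available=18)
letter = grade(pct)
```

Dividing by the points available to that foundation, rather than by the pillar's full total, is what stops an exemption from counting against it.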

The whole project is overseen by Giving Evidence’s Director Caroline Fiennes. Details of our team are here. Masses of detail about the method is in this Twitter thread and the report above.

When does Giving Evidence expect to publish the findings each year?

Each year, the research is done during August–November; the analysis is done in December–February; and we publish the results in March.

How do I sign up to get updates on this project?

Sign up to Giving Evidence’s newsletter, here, and watch the Twitter feeds of Giving Evidence’s Director Caroline Fiennes here and the Foundation Practice Rating here.

What is the timetable on what will happen next?

We are soon running the research for Year Four. We expect to publish the Year Four results in Spring 2025.

Can Giving Evidence do this analysis for foundations based in other countries?

Actually, it needs to be run by somebody in-country, who really knows the foundations, data and regulatory requirements in that country. We’re happy to help you do it. You will need funding, lol. Contact us here.

Further information is available on the Foundation Practice Rating website.