‘Evidence is not the plural of anecdote’, wags often say. Sure, but what is it?

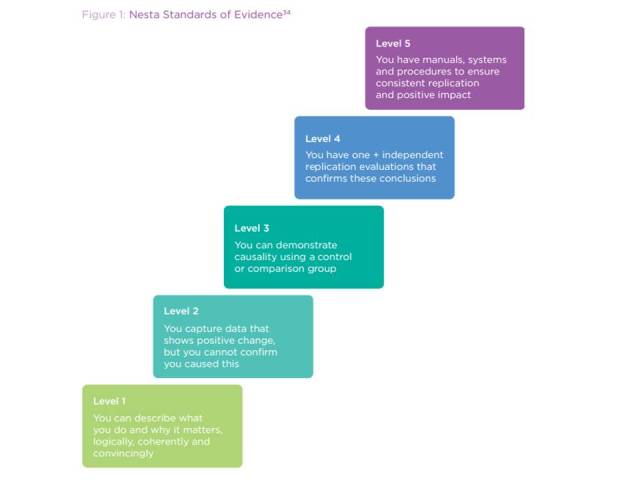

Evidence comes in many forms, some distinctly better than others. Below is a hierarchy produced by NESTA. Is it any good?

Level 1 is essentially having a plausible theory of change. This is a rather odd inclusion on a hierarchy of evidence because it’s not evidence: it’s a story. Still, a coherent theory of change is (usually) necessary for effectiveness.

Level 2 is some supportive-looking data. Notice that this is as far as most charities & donors ever get.

Level 3 requires ‘demonstrating causality’, which NESTA (rightly) says requires a control group. Notice that very few charities or charitable donors get this far. Notice too that it’s often impossible to have a control group: if you run a national campaign, or work to change the law, then you’re working on a sample of 1 (there’s only one nation, or one set of laws). If you work on a very rare disease, there may be too few patients to form treatment and comparison groups large enough for any difference between them to be statistically significant. So, interestingly, under NESTA’s view, you can never get very good evidence in those numerous cases. I’d have expected Level 3 also to require that the study be robust: e.g., that the comparison group is genuinely comparable (selection bias having been removed by randomisation, and other biases such as survivorship bias and cross-over effects having been dealt with), and that the treatment and control groups be large enough for the results to be statistically significant.
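To see why a rare disease can defeat the control-group approach, here is a rough sample-size sketch using the standard normal-approximation formula for comparing two proportions. The outcome rates, the 5% significance level and the 80% power are illustrative assumptions, not figures from NESTA, and `n_per_arm` is a hypothetical helper name.

```python
import math
from statistics import NormalDist


def n_per_arm(p_control, p_treated, alpha=0.05, power=0.80):
    """Approximate participants needed in EACH group to detect a difference
    between two proportions (two-sided test, normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p_control - p_treated) ** 2)


# Hypothetical: an intervention cuts a poor-outcome rate from 50% to 30%.
print(n_per_arm(0.50, 0.30))  # 91 per group, so 182 people in total
# A more modest effect, from 50% down to 40%, needs far more people.
print(n_per_arm(0.50, 0.40))  # 385 per group
```

If only a hundred or so people in the country have the condition, even the optimistic scenario is out of reach, which is the bind described above.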

Level 4 requires having studied the intervention in more than one location or at more than one time, with the studies conducted independently. This helps ensure that the result is robust and real: repeatability is crucial to any scientific discovery (we can all claim that we got cold fusion to work in our bathroom last Tuesday but mysteriously can’t do it again). Note, though, that it isn’t enough for the studies simply to be in different locations: deworming children doesn’t increase school attendance in Scotland, yet it does help in India, so we need to be careful that the replication studies are really relevant to the context in question.

And it’s obviously important that the results be independently verified – though again, the overwhelming majority of ‘evidence’ used by charities and donors isn’t, and hence may well be garbage.

Level 5 is frankly a surprise. Having a manual on how to do something doesn’t, to my mind, constitute evidence: it’s just a manual. That may be useful for implementation, but it doesn’t of itself add to the certainty that the intervention actually works.

What I’d expect to see at the top level is a systematic review / meta-analysis showing that analysing all the studies of this intervention as though they were one (amalgamating the samples of all of them), giving more weight to big studies than to small ones, produces a positive result. This guards against cherry-picking: often some studies will show an intervention to work and others will show it not to work. That situation is pretty confusing for a practitioner, who might conclude that the evidence base is inconclusive. Not so! Often, a meta-analysis will show that the answer is there, just hidden. Medicine is awash with such examples: interventions that looked fine, but that careful analysis showed were in fact fatal. (The analysis can be displayed in a ‘blobogram’, also called a forest plot, shown here.)
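To make ‘giving more weight to big studies’ concrete, here is a minimal sketch of a fixed-effect, inverse-variance meta-analysis: each study counts in proportion to 1/SE², and bigger studies have smaller standard errors. The five studies are invented for illustration, not real data, and the function names are hypothetical. Real systematic reviews often use a random-effects model instead when studies differ in setting or population.

```python
import math

Z_95 = 1.96  # normal-approximation multiplier for a 95% confidence interval


def confidence_interval(estimate, se):
    return estimate - Z_95 * se, estimate + Z_95 * se


def fixed_effect_meta(estimates, std_errors):
    """Pool effect estimates with inverse-variance (fixed-effect) weights.

    Each study gets weight 1 / SE**2, so large, precise studies count for
    more than small, noisy ones.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled_se


# Hypothetical studies (not real data): a negative effect means harm.
estimates = [-0.25, 0.10, -0.30, -0.20, -0.15]
std_errors = [0.15, 0.20, 0.18, 0.12, 0.10]

for est, se in zip(estimates, std_errors):
    lo, hi = confidence_interval(est, se)
    print(f"study {est:+.2f}  95% CI ({lo:+.3f}, {hi:+.3f})")  # every CI spans zero

pooled, pooled_se = fixed_effect_meta(estimates, std_errors)
lo, hi = confidence_interval(pooled, pooled_se)
print(f"pooled {pooled:+.3f}  95% CI ({lo:+.3f}, {hi:+.3f})")  # excludes zero
```

With these made-up numbers, every individual study’s interval straddles zero (one even points the other way), yet the pooled estimate is about -0.17 with an interval of roughly (-0.29, -0.05), which excludes zero: a harm hidden in an apparently inconclusive literature.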

Hence medicine puts systematic reviews at the top of its hierarchy of evidence, and they are the most frequently cited form of clinical research. Medicine has a huge global network entirely dedicated to producing them – The Cochrane Collaboration – and they also happen in disaster & emergency relief, and in international development via 3ie and others.

Notice that you could get to the top of NESTA’s hierarchy with a manual for doing something for which the evidence looks mixed, even if that mixture actually contained proof that the intervention is harmful, or even fatal.

Getting charities and donors to the point that their data can be scrutinised by systematic reviews is tough, but we must. These are the most rigorous form of evidence, and hence I’m delighted to be on a board of The Cochrane Collaboration.

Actually, ‘what is our impact’ is the wrong question. Here’s why–>


Loved this post. Which board of the Cochrane Collaboration are you on?

I’m on the advisory board of Evidence Aid, the bit of Cochrane which deals with medical care after disasters & emergencies (tsunamis, earthquakes etc.). I explain here why I joined: https://giving-evidence.com/2013/01/16/why-im-delighted-to-join-the-advisory-board-of-evidence-aid/

Ah great – that makes sense! Hello!! I work for Cochrane (in the same office as Claire Allen from Evidence Aid). I am particularly passionate (to put it mildly) about getting laypeople (including schoolchildren, not just the traditional “consumers” – hate that word) involved with Cochrane and the evidence synthesis process by giving their time to do hands-on work, e.g. coding trial reports, tracking unpublished studies etc., so that they learn how important it is. Altruistic work for most, but for kids a great CV booster, and what an educational activity. Ben Goldacre is always lamenting that EBM is not taught in schools… well, it could be!
